The 2008-ness of running models on webpages

tl;dr: I built a bunch of very silly LLM-in-the-browser experiments at murd.ai. If you have a WebGPU-compatible browser (see below) you can explore them, including the least efficient version of Pong ever.

Amidst all of the frontier model advancements, 2025 has also been the year of increased capabilities for small models. Thanks to NewsArc, I’m quite familiar with what frontier and flash models can do in production, but hadn’t really explored where small models had advanced to.

Coding some toys with Google DeepMind’s Gemma3 was easy, fun, and eye opening. It also brought back memories of Bruce and I coding Walletin and becoming convinced in mid-2008 that the future of the high-performance web had arrived.

Hello, JS performance

For Bruce and me — both longtime game developers and thus “embedded C solves all problems”-thinkers — 2008 was when it became obvious that javascript was going to enable you to build genuinely interesting experiences in the browser. Like a lot of game developers, we’d missed the dot com bubble because — duh — games were the most high-tech, interesting projects you could possibly work on. Plus, you had long hours and low pay. Who would trade that in for some dot com? (Similar moments include “Who would want to be a VC?” to MarcA in 2007, “That doesn’t seem as cool at Linden Dollars” to Ed Felten before his bitcoin lecture at Facebook in 2009, and “No, I need to go do another startup” to Mark Zuckerberg in 2013 — subscribe to my career advice newsletter)

Anyway, compared to the hellscape of web development prior to Firefox (and then Chrome) driving real web standards — see, e.g. jQuery et al — being able to write clean javascript and get the browser to do game-like things was a revelation. By applying the lessons of Second Life and MMOs, we knew we could do something pretty incredible and Walletin was born.

Walletin was, in short, “interactive realtime multi-user photoshop in the browser.” It wasn’t as design focused as Figma — after all, web apps and mobile apps barely existed at this point — but was an infinitely zoomable, interactive canvas that allowed basically all the photoshop actions (thank you, ImageMagic and GhostScript running on the server) using streaming, mipmapping, and a host of game engine tricks to keep it all smoothly interactive. Our demo to Facebook that got us acquired was a shareable, animating presentation that included live demos of foreground object extraction in the browser on an original iPhone.

Part of our presentation to Facebook was the performance trendline, specifically how likely it was that real performance in the browser was coming fast. Then V8 launched and despite many mobile hiccups along the way — and the shortterm need to leverage native — we were off to the races.

Large Language Models WebASM/WebGPU feel exactly like 2008 javascript to me.

What can 1B (or 270M) parameters do for you?

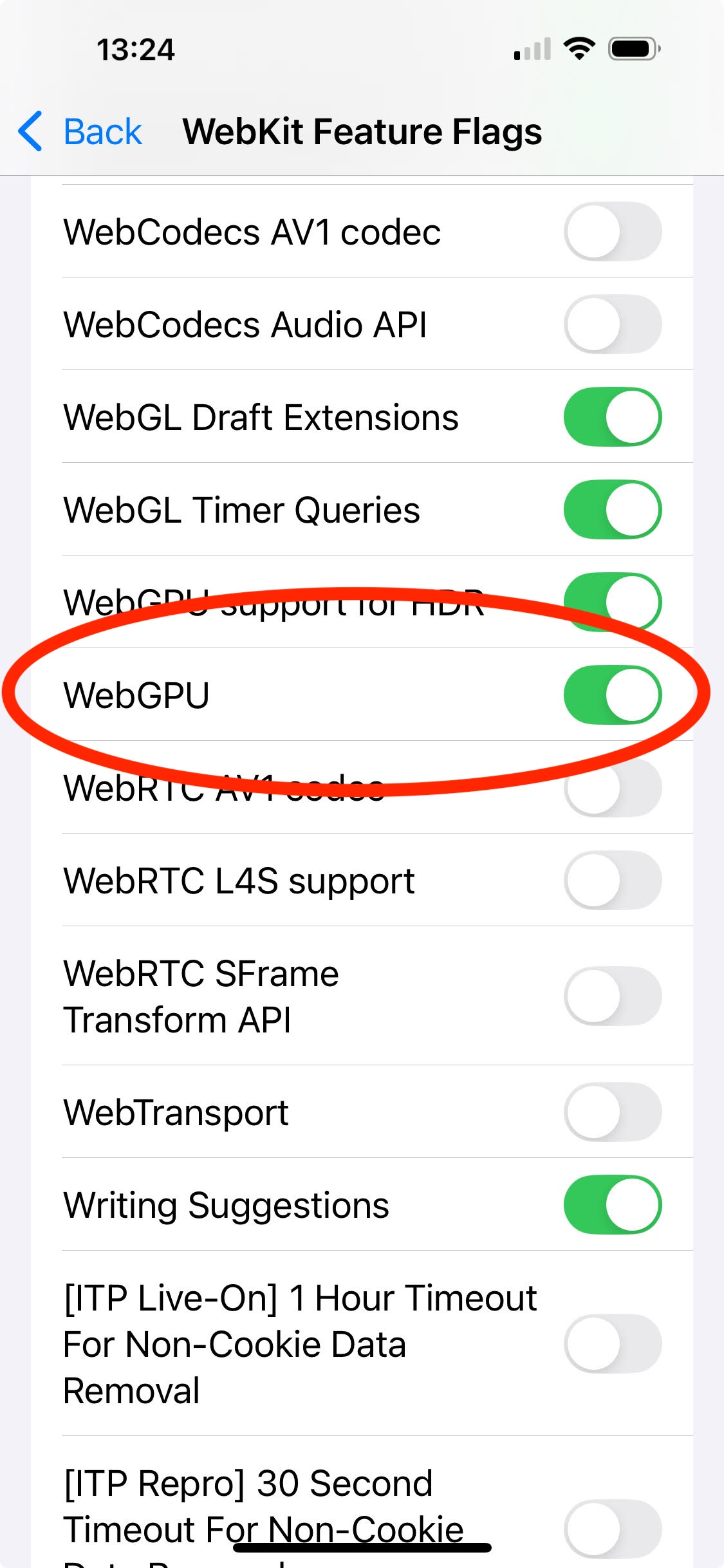

If you have a WebGPU-enabled browser (modern Chrome, modern mobile-Chrome, mobile Safari if you turn on the feature flag in settings) you can go visit murd.ai and see a bunch of very silly LLM-in-browser experiments.

All of the experiments run Gemma3 — the 1B parameter version on desktop or the 270M parameter model on mobile. The model will get downloaded once and then cached via a worker thread so return visits startup much more quickly.

AI Harms Gameshow

Given a scenario with potential AI harms, can you explain the risk and how to avoid it? All the scenarios came from real LLM-related issues that have happened in the last two years. Your answers are judged by Gemma3. It does a surprisingly good job of parsing short answers — despite Gemma’s large context window with larger models, for the embedded versions prompt and answer need to fit in 1000 tokens. Be sure to follow the correct turns prompt structure because otherwise Gemma gets very confused. Otherwise, pretty straight forward. Compared to the reasoning we’ve all gotten used to with larger models, the tiny models are pretty limited and are more sensitive to focusing on the start or the end of the prompt, but overall I was surprised by how well it worked.

AI Versus

Cards Against Humanity, but G-rated and against an AI. Given silly scenarios and even sillier tools, can you argue for a better plan to complete the scenario than the AI? Here, Gemma3 is used both to build the AI’s attempt and to do judging — totally fair, I assure you. Really long arguments tended to regularly lose to the AI — especially with the 270B Gemma3 model — but shorter arguments were judged rather well.

AI Q&A

Ask questions about AI harms. Sort of a more flexible version of the gameshow. Since you can do multiturn conversations, things quickly go off the rails as context gets lost, but the tiny model’s ability to handle synopsizing, paraphrasing, and generally understand free form inputs definitely far outstrips what could have been done with NLP.

MURD Pong

The truly silly one. Tell the AI where the ball is and where its paddle is and ask it what direction to move the paddle. Literally the least efficient way to build pong I think ever attempted. Except… it works. With a modest prompt length, the LLM is more than capable of interactive response rates and unless the Awesome Pong Physics(tm) fails, the LLM will win.

So what did I learn?

It’s pretty easy to get Gemma3 up and running in the browser — much easier than high-performance javascript was in 2008. Moreover, it’s clear that similar local capabilities — whether exported from the browser or the platform — will be coming very quickly and being able to use them to improve inputs, solve weird corner cases NLP struggles with, or generally make funky experiments is going to be awesome.

It also reinforces how quickly devices that don’t have at least halfway decent LLM capabilities are going to get stomped by those that do.