Bootcamp.AI

What if we stopped thinking of coding agents as side panels in editors or asynchronous robots and instead thought of them as Bootcamp?

Boz had a great idea

For all of Boz’s contributions to Meta over the years, I think Bootcamp is probably the most important and impactful. Boz talks tough in the post I linked to, but the Bootcamp experience was wonderful. I’ve been through a lot of company onboardings and Bootcamp was by far the best. They’re hard to create — we still haven’t figured out how to retrofit one at SmartNews, though we are experimenting and hiring.

Key characteristics of Bootcamp

I’ve been out of Meta for more than a decade, but Back in the Day (tm) Bootcamp had some amazing features that helped make it so successful.

- It was a process. Every new engineer went through it, and it included specific training, visits with key teams, and reviews of Meta’s current priorities.

- Everyone had a mentor who was responsible for extensive code review of everything you wrote. The mentor, in consultation with other mentors, would decide whether you were ready to graduate. Most people did, but not everyone, which also helped improve the hiring process.

- Anyone could tag a bug as a Bootcamp bug. Mentors reviewed the tagged bugs to double-check that they were appropriate, but within days of being hired you were delivering fixes!

- Bootcampers were a cohort, so we could talk to each other and solve problems together.

So what would coding assistants look like if they were modeled on Bootcamp?

Bootcamp as a model for AI coding agents

Process

There would be great, reviewable clarity about your goals, process, tooling, stack, and so on. It would be optimized for coding agents and regularly tested for improvements or drift as models are updated. AGENTS.md is a good start, but this goes further: it means creating systems that ensure:

- Consistent language across prompts

- Clear separation between AGENTS.md and prompts

- Guidelines for how and when to burn context on code exploration

- When to reset context and try again

- Requirements — not suggestions, Claude! — about testing and coverage per commit

- How often to test alternatives to all of these

- O11y (observability) for every aspect of agent performance, particularly the rate of positive change delivered to customers
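To make "requirements, not suggestions" and the o11y point concrete, here is a minimal sketch of a per-commit gate plus one crude proxy for the rate of positive change. Everything in it is hypothetical: the `AgentCommit` fields, the `MIN_COVERAGE_DELTA` policy, and the choice of "shipped and not reverted, per day" as the metric are assumptions for illustration, not a recommended definition.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AgentCommit:
    # Hypothetical record of one commit produced by a coding agent.
    sha: str
    has_tests: bool
    coverage_delta: float  # change in line coverage, in percentage points
    shipped: bool
    reverted: bool


# Hypothetical policy: an agent commit may never lower coverage.
MIN_COVERAGE_DELTA = 0.0


def passes_gate(c: AgentCommit) -> bool:
    """A hard requirement: the commit includes tests and does not reduce coverage."""
    return c.has_tests and c.coverage_delta >= MIN_COVERAGE_DELTA


def positive_change_rate(commits: list[AgentCommit], days: float) -> float:
    """Shipped, non-reverted agent commits per day (one crude proxy, not the only one)."""
    if days <= 0:
        raise ValueError("days must be positive")
    good = sum(1 for c in commits if c.shipped and not c.reverted)
    return good / days
```

A real system would likely weight changes by customer impact rather than counting them, but even a crude count gives you something to watch for drift when the model underneath changes.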

Mentors

Both human and agent-based code review of everything the agents produce. The review checks not just for passing tests and performance against company goals, but also for the style and quirks of the codebase, for cheating, and for lessons that should be fed back into the process. Treat the debates, discussions, and learnings within this group as data, and feed them back into the processes for testing. When testing competing processes and viewing results, record past ideas and tests so the system makes new and interesting mistakes instead of repeating old ones.
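One way to "record past ideas and tests" is a ledger keyed by a fingerprint of each process change, so that a proposal the mentors already tried is flagged along with the lesson drawn from it. This is a minimal sketch; `ExperimentLedger`, `fingerprint`, and the shape of a "process change" are all hypothetical, and exact-match hashing only catches literal repeats.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional


def fingerprint(process_change: dict) -> str:
    """Stable hash of a proposed process change (e.g. a prompt or AGENTS.md tweak)."""
    canonical = json.dumps(process_change, sort_keys=True)  # key order does not matter
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ExperimentLedger:
    """Hypothetical record of process experiments and the lessons mentors drew from them."""

    entries: dict = field(default_factory=dict)

    def record(self, process_change: dict, outcome: str, lesson: str) -> None:
        self.entries[fingerprint(process_change)] = {
            "change": process_change,
            "outcome": outcome,
            "lesson": lesson,
        }

    def is_repeat(self, process_change: dict) -> bool:
        # True if this exact change has been tried before.
        return fingerprint(process_change) in self.entries

    def prior_lesson(self, process_change: dict) -> Optional[str]:
        entry = self.entries.get(fingerprint(process_change))
        return entry["lesson"] if entry else None
```

Usage: after `ledger.record({"rule": "always run the full suite"}, "worse", "too slow for small bugs")`, proposing the same rule again makes `ledger.is_repeat(...)` return `True`. Catching paraphrased repeats would need something fuzzier, such as embedding similarity, which this sketch deliberately leaves out.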

Bootcamp bugs

There are times when an RFP and full-on design process is the right way for a group to collaborate on a project; that will be another writeup. This system is optimized for all the other moments, when you just want to flag a bug and have a general sense that it will get fixed. In those cases, you don’t want an interactive discussion with your AI. Just file the bug and let the system go solve it. Mentors are there to help ensure it’s the right scale of bug or feature request. After all, for any given model and codebase, some tasks are more likely than others to be solvable by an agent. Mentors, both human and agent, should be able to make reasonable guesses about which systems to use. If an attempt to solve a bug fails, add that to the collective Mentor wisdom and improve how you assign bugs.
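The routing idea can be sketched as a per-category success estimate that mentors update after every attempt. Assumptions here are loud: the `Bug` and `BugRouter` names, the idea that a mentor labels each bug with a category, the 0.5 threshold, and the use of Laplace smoothing are all hypothetical choices for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Bug:
    id: str
    category: str  # hypothetical label a mentor assigns, e.g. "css" or "null-check"


class BugRouter:
    """Routes bugs to an agent or a human based on past agent outcomes per category."""

    def __init__(self, threshold: float = 0.5):
        self.threshold = threshold
        self.successes: defaultdict[str, int] = defaultdict(int)
        self.attempts: defaultdict[str, int] = defaultdict(int)

    def estimated_solve_rate(self, category: str) -> float:
        # Laplace smoothing: an unseen category starts at 0.5 rather than 0 or 1.
        return (self.successes[category] + 1) / (self.attempts[category] + 2)

    def route(self, bug: Bug) -> str:
        if self.estimated_solve_rate(bug.category) >= self.threshold:
            return "agent"
        return "human"

    def record_outcome(self, bug: Bug, solved: bool) -> None:
        # Every attempt, especially a failure, becomes part of the collective Mentor wisdom.
        self.attempts[bug.category] += 1
        if solved:
            self.successes[bug.category] += 1
```

With the default threshold, a bug in a never-seen category goes to an agent (estimate 0.5); after two failed agent attempts in that category the estimate drops to 0.25 and new bugs there route to a human.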

Cohorts

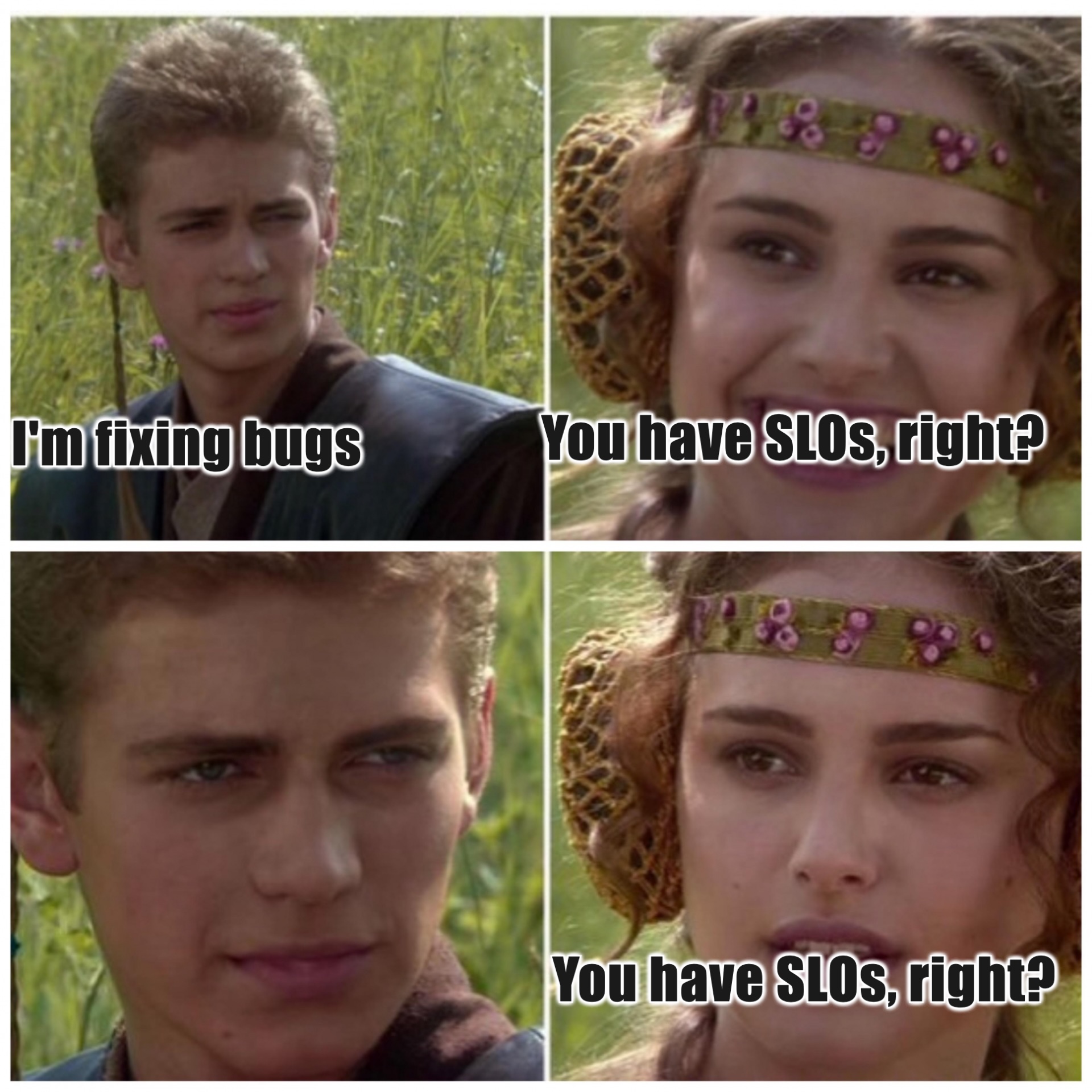

Create cohorts of agents that collectively work on problems, both to compare approaches and to compare results. If your amazing AI agent costs 1/100 as much as a human engineer, why not take 5 or 10 shots at a problem? As with mentors, optimize for new and interesting mistakes, not repeating old ones. Given that you have SLOs and o11y, plus all the other test coverage that LLMs are pretty darn good at writing, you should have enough signal to choose the better solutions. Encode the winning information so that the next agent working on a similar bug or task can start with more context.
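Taking several shots and keeping the best one is a best-of-N selection. Below is a minimal sketch, assuming each attempt reports test results, SLO violations, and diff size; the `Attempt` fields, the scoring weights, and the note format are hypothetical and would need tuning against real signal.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Attempt:
    agent: str
    tests_passed: int
    tests_total: int
    slo_violations: int  # hypothetical signal from o11y / SLO checks
    lines_changed: int


def score(a: Attempt) -> float:
    """Hypothetical scoring: reward passing tests, penalize SLO violations and large diffs."""
    pass_rate = a.tests_passed / a.tests_total if a.tests_total else 0.0
    return pass_rate - 0.5 * a.slo_violations - 0.001 * a.lines_changed


def pick_winner(cohort: list[Attempt]) -> Attempt:
    # Only attempts that pass every test are eligible to win.
    eligible = [a for a in cohort if a.tests_total and a.tests_passed == a.tests_total]
    if not eligible:
        raise ValueError("no attempt passed all tests; escalate to a mentor")
    return max(eligible, key=score)


def winning_notes(winner: Attempt, cohort: list[Attempt]) -> str:
    """Context to hand to the next agent working on a similar bug."""
    losers = [a.agent for a in cohort if a is not winner]
    return f"Preferred approach from {winner.agent}; rejected: {', '.join(losers)}"
```

For a cohort where `agent-a` passes all tests with one SLO violation, `agent-b` passes all tests with none but a larger diff, and `agent-c` fails two tests, `agent-b` wins. In practice the notes would carry much more than agent names, such as what the losing attempts got wrong, so later cohorts don't repeat those mistakes.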

Not about chatting or interactivity

I have more thoughts on this, but note that virtually none of this is interactive. Sure, the mentors will have some feedback on bugs and maybe ask an LLM to explain why it made a decision, but just because LLMs are good at being Chatty McChattersons doesn’t mean that you should build partnered tools that way.

When we tossed a bug to Bootcamp, we could just move on, knowing the machine Boz had created would probably just fix it. We need to build more AI tools that work that way, too.