A Personal Computer History

Nothing like sitting in an airport after a redeye to get me pondering my history with computers. While I’ll defend Atari’s honor (and am bemused by the random twists and turns that brought me there), my Atari period was actually quite brief: 6th through 9th grade.

And then, Big Education forced me to start using an Apple. Today Macs are my primary computers, but it was a long path to get there.

1985-1988: I can play games during study hall?

I switched schools between freshman and sophomore years of high school (I don’t recommend it), but I quickly found an unexpected win. In 1985, we had a computer lab full of Apple ][+ and //e’s. Despite the limited graphics of Apples from that era, the games were captivating. Games quickly replaced my previous study hall activity of devouring Clive Cussler, Isaac Asimov, and Larry Niven books, but there was a problem: you weren’t allowed to play games on the lab computers.

Unless you were the monitor.

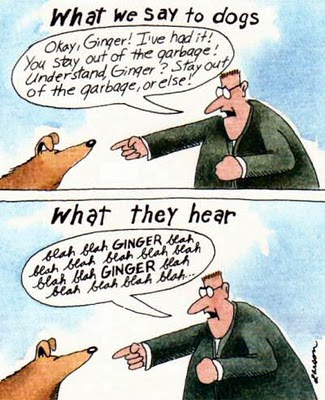

OK, if it meant getting to play games, I could work for The Man. There were some responsibilities involved, though I was pretty much Ginger in this comic: just s/Ginger/You can play games/. Monitor time also introduced me to the incredible world of writing viruses. Did you know you could rename a file on disk to include invisible characters, making it nearly impossible to reference the file? This inspired a later “you shouldn’t have left your computer logged in” campaign at the U.S. Naval Academy (USNA), but that’s a story for another day.

So, for a few years it was Apple at school, Atari at home. Pascal replaced BASIC. But you could feel the PCs coming: a few weeks of a summer program introduced me to 8088 assembly. Change was on the way.

1988-1995: PC iterations with some VAX VMS flings on the side

Like a lot of universities of the time, USNA at the end of the ’80s had an oddly split personality. All the serious computing was on VAX mainframes running various flavors of VMS. Using Ada because…reasons. Probably the most useful aspect of smashing into Ada repeatedly, and of having it used as a teaching language, was that it instilled a healthy fear of object-oriented languages and inoculated me against the weird 1998-2002 notion that C++ was a good idea.

VMS at USNA ended up being as much about PvP (e.g., “write a hack that auto-recursively logs you on so only you can submit projects on time”) as about operating systems lessons. It did leave me with a blind spot for Unix, which was picking up steam in a lot of computer science departments at the time. The VMS experience did pay off at Sanders, where CombatDF, COBLU, and other systems ran on VMS Pascal (despite the whole Ada thing).

1995-2003: PCs and embedded C

Then I moved to California to build Armageddon. We were developing on PCs but targeting our custom MIPS R5000 + 3Dfx arcade hardware. C, obviously. As with most embedded efforts, step one was getting the Green Hills compiler working for our particular configuration. Best lesson here: if you are late paying suppliers, they send you defective chips.

Pentium Pros running Windows NT were pretty bleeding edge. That was the only configuration that could approximate our hardware’s streaming animation system, and only by precaching the decompressed texture library in RAM and using just two rotations of each sprite rather than the real hardware’s five.

It was also my introduction to Visual Studio. Thanks to my Unix blind spot, I’d dodged the whole vi/Emacs debate. There were a bunch of different C editors floating around for embedded (and later console) development, but Visual Studio felt like a product from the future.

After Acclaim, it was more PC + MIPS for Nintendo 64 development, this time the R4000 plus the N64’s custom R4000-based graphics/audio ASIC. It was slower and trickier than our arcade hardware, and it needed some subtle multithreading to manage sharing the same silicon between audio and graphics, but it was still of a piece.

The first 3 years of Second Life continued the trend, though now it was just PC development and an even better version of Visual Studio.

2004-2007: A resurgent Apple

Second Life was used by tons of creative people, so as Apple returned to caring about performance and games (sort of), we decided Second Life needed to build for both platforms. Fun times: since this was before the Intel transition, it meant dealing with endianness (PowerPC Macs were big-endian, x86 PCs little-endian), but otherwise it was pretty smooth. It also meant living and working on Macs for the first time, and it was revelatory. As development tools, the Macs (even the MacBooks) were head and shoulders above the Wintel machines of the time, except for specific cases where Visual Studio and C were deeply entwined.

2008-today: Once you go web, you never go back (except for the mobile transition and playing games)

Starting with EMI, I transitioned from embedded C developer to web developer. It was so easy. There were docs, communities, and tooling that transformed how we all thought about speed and productivity. Sure, you had to make significant performance sacrifices, but in consumer products, where you’re always building something new on a continuous quest for product-market fit, the tradeoff was an easy one to make.

The web also convincingly won this period because, seemingly overnight, nearly everything was Chrome or Chromium. While there was still some browser variance, we finally had a global platform to target that felt like it had been built by grownups.

Unsurprisingly, Macs, with their underlying BSDness, dominated this period for development. When Node.js came on the scene, it became possible to do what we’d been doing in games forever (write your entire stack in one language, C for us) except with JavaScript. Iteration on JavaScript runtimes followed.

And, yes, there were exceptions, like the mobile transition, but the web has been the main platform I’ve thought about and developed for. Throughout the period, my primary computers have been Macs, with two exceptions:

- While working at Google, I tried to use ChromeOS as much as possible. Chromebooks were occasionally spectacular, often frustrating. I’m excited to see the convergence that many of us argued for finally happening.

- Games. During COVID I returned to playing online PC games. While I also like consoles (I’ve spent a staggering amount of time getting to NG+7 in Elden Ring), I really prefer playing shooters, especially FPSs, with a mouse and keyboard. I can’t say enough good things about the smart folks at Puget Systems.

What’s coming next

Everything that made the web a robust platform is doubly true for AI + the web, but that’s a post for another day.